Using Aria's Data Feed Web Service involves four key components of feed consumption:

- Getting your SSE client up and running

- Loading a snapshot of Aria data events via the Load Stream

- Consuming near-real-time Aria data events via the Change Stream

- Planning for data continuity

Getting Your SSE Client Up and Running

You must contact Aria to enable Data Feed. Once it is enabled, you will have the endpoint information and can obtain an authorization code to connect to the Change Data Feed. You can then begin writing your SSE client script. Several pieces of example code, written in Python, are available to get you started.
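As a starting point for an SSE client, the sketch below parses standard Server-Sent Events framing (`event:`, `data:` and `id:` fields, with a blank line ending each event) and streams events over HTTP using only the Python standard library. It is not Aria's example code. The URL and the `Authorization: Bearer` header are hypothetical placeholders; substitute the endpoint and authorization scheme Aria provides. Each event's `data` list keeps its data lines in order, so `data[0]` is the header line.

```python
import urllib.request
from dataclasses import dataclass, field
from typing import Iterable, Iterator, List, Optional


@dataclass
class SseEvent:
    event: str = "message"  # SSE default type when no "event:" field is sent
    data: List[str] = field(default_factory=list)  # one entry per "data:" line
    event_id: Optional[str] = None  # value of the "id:" field, if any


def parse_sse(lines: Iterable[str]) -> Iterator[SseEvent]:
    """Turn decoded text lines of an SSE stream into events."""
    current = SseEvent()
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line ends the event; events without data lines are dropped.
            if current.data:
                yield current
            current = SseEvent()
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        name, sep, value = line.partition(":")
        if sep and value.startswith(" "):
            value = value[1:]  # SSE strips a single leading space from values
        if name == "event":
            current.event = value
        elif name == "data":
            current.data.append(value)
        elif name == "id":
            current.event_id = value
        # Other fields (e.g. "retry") are ignored in this sketch.


def stream_events(url: str, token: str) -> Iterator[SseEvent]:
    """Open a streaming HTTP connection and yield parsed events.

    Hypothetical: the bearer-token header is a placeholder for whatever
    authorization scheme Aria provides with your endpoint information.
    """
    request = urllib.request.Request(
        url,
        headers={"Accept": "text/event-stream", "Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(request) as response:
        # Iterating an HTTP response yields raw byte lines.
        yield from parse_sse(line.decode("utf-8") for line in response)
```

When iterating `stream_events(...)`, `ev.event` would be `load` for Load Stream events and `create`, `update` or `delete` for Change Stream events.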

When you are satisfied that your scripts consume Aria data in the desired manner, you are ready to consume the Load Stream.

Loading a Snapshot of Aria Data

Events in the Load Stream all have the event type "load" and represent a current snapshot of a specified subset of Aria client data, such as "all account data," "all financial data," or "all usage data." To receive the Load Stream, you must submit a request to Aria Customer Support to launch an "extraction job" to generate a Load Stream with the desired data.

Coordinate the Load Stream with the Change Stream, described below, to ensure that you capture all existing and modified Aria data.

Consuming Near-Real-Time Aria Data Events

Events in the Change Stream describe changes to Aria data in near-real-time. Each of these SSE events has one of three event types: "create," "update" or "delete." Each event maps to an Aria data entity identified by the header (first) data line of the event. Update events include the updated fields of the data entity and may omit fields that have not changed.
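A local copy could apply these three event types roughly as follows. This is a minimal sketch, assuming the header line has already been parsed into a hypothetical `(entity_type, entity_id)` key and the remaining data into a field dictionary; the actual event format is not described here. Because update events may omit unchanged fields, updates are merged into the stored record rather than replacing it.

```python
from typing import Any, Dict, Tuple

# Hypothetical key derived from the event header: (entity_type, entity_id).
Key = Tuple[str, str]
Store = Dict[Key, Dict[str, Any]]


def apply_change(store: Store, event_type: str, key: Key,
                 fields: Dict[str, Any]) -> None:
    """Apply one Change Stream event to the local copy of an entity."""
    if event_type == "create":
        store[key] = dict(fields)
    elif event_type == "update":
        # Merge: fields absent from the event keep their stored values.
        # Updates for entities not held locally are ignored in this sketch,
        # since the event may carry only the changed fields.
        if key in store:
            store[key].update(fields)
    elif event_type == "delete":
        store.pop(key, None)
    else:
        raise ValueError(f"unexpected change event type: {event_type!r}")
```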

Planning for Data Continuity

If you plan to start using Aria's Data Feed Web Service and already have a live instance of the Aria billing platform, your plan should ensure that you consume:

- a snapshot of existing data via the Load Stream

- live data events that occur while consuming the Load Stream (which can take up to several hours) via the Change Stream

Coordinating the Change and Load Streams

The Load Stream captures a snapshot of the data in your Aria instance, covering the data that exists from the moment the Load Stream starts until its latest event has been consumed. When run against a live environment, the Load Stream by definition does not capture events that occur after that latest event, and in a live environment such events will likely occur after you initiate the Load Stream.

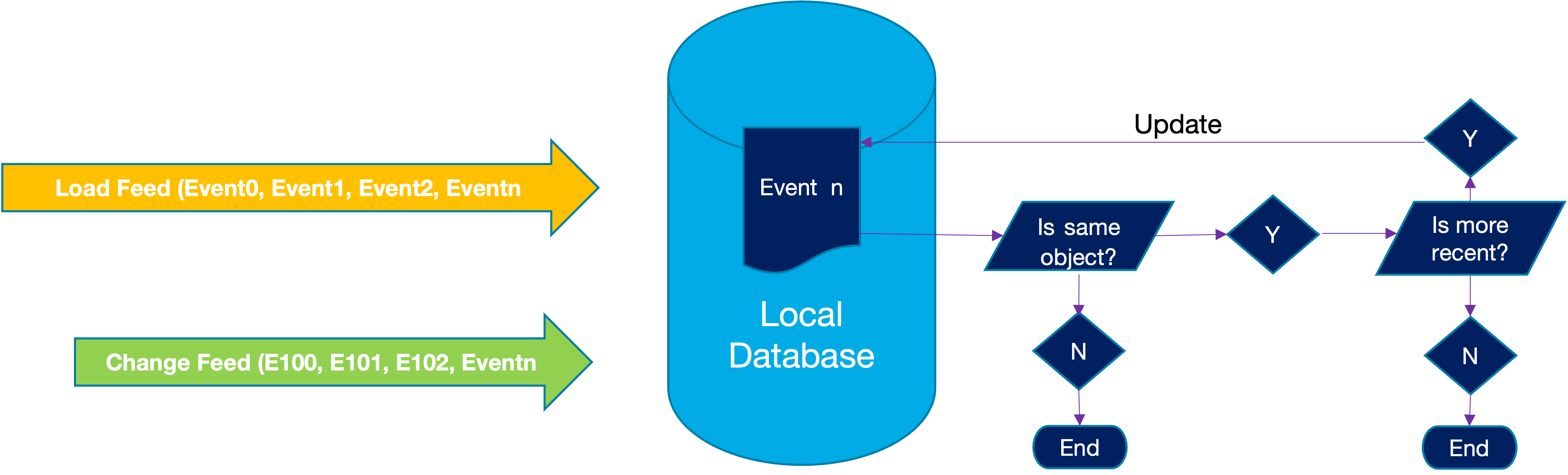

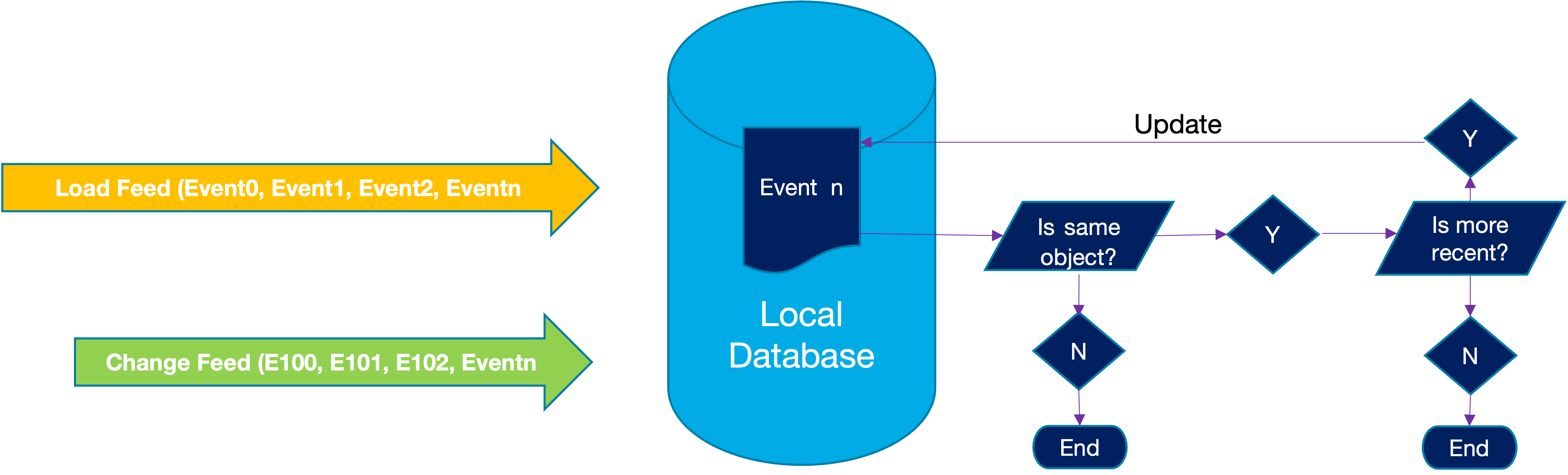

If you do not run the Change Stream concurrently with the Load Stream, there can be data gaps. As a best practice and to avoid these gaps, Aria recommends the following procedure:

- Open an SSE client for both streams.

- As you receive load events, save that data to a local database, together with the load event's timestamp and tick found in the event header (the first of the two data lines).

- As you receive change events, check whether the entity described by each event has already been received in a load event. If it has, update your local copy accordingly.

- Once the last load event is received, you will have a full copy of the Aria dataset that corresponds to the time of that event.
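The procedure above can be sketched as a small in-memory store. `LocalCopy`, the `(entity_type, entity_id)` key and the integer `(timestamp, tick)` position are hypothetical; in practice you would persist to your local database. Skipping change events at or before the loaded position is an assumption based on the timestamp-and-tick ordering rule. The procedure does not say what to do with change events for entities that have not been loaded yet, so `on_change` returns `False` for them and leaves that decision to the caller.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Tuple

Position = Tuple[int, int]  # hypothetical: (timestamp, tick) parsed from the header
Key = Tuple[str, str]       # hypothetical entity key, e.g. ("account", "123")


@dataclass
class LocalCopy:
    records: Dict[Key, Dict[str, Any]] = field(default_factory=dict)
    positions: Dict[Key, Position] = field(default_factory=dict)

    def on_load(self, key: Key, pos: Position, fields: Dict[str, Any]) -> None:
        # Save the snapshot data together with its header timestamp and tick.
        self.records[key] = dict(fields)
        self.positions[key] = pos

    def on_change(self, event_type: str, key: Key, pos: Position,
                  fields: Dict[str, Any]) -> bool:
        """Apply a change event to a loaded entity; return True if applied."""
        if key not in self.positions:
            return False  # entity not loaded yet; caller decides what to do
        if pos <= self.positions[key]:
            return False  # assumption: local copy already reflects this point
        if event_type == "delete":
            self.records.pop(key, None)
        elif event_type == "create":
            self.records[key] = dict(fields)
        else:  # "update": merge, because unchanged fields may be omitted
            self.records.setdefault(key, {}).update(fields)
        self.positions[key] = pos
        return True
```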

How can I determine the order of events received in the two different feeds?

- You cannot order events by when your client app receives them, because transmission speeds and the speed at which your client consumes events can vary.

- Events must be ordered by the timestamp and tick in their event header. If two events have the same timestamp, use the tick as the tiebreaker.
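Ordering events from both streams then amounts to sorting on a `(timestamp, tick)` pair, regardless of arrival order. In this sketch, `EventPosition` and `merge_order` are hypothetical, and the header timestamp is assumed to have been parsed into an integer that sorts chronologically.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EventPosition:
    stream: str     # "load" or "change"; informational only
    timestamp: int  # hypothetical: header timestamp parsed to an integer
    tick: int       # tiebreaker from the same header


def merge_order(events: List[EventPosition]) -> List[EventPosition]:
    """Order events from both streams by timestamp, then tick."""
    return sorted(events, key=lambda e: (e.timestamp, e.tick))
```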

Outages

Both the Change Stream and the Load Stream may be rerun as many times as you like, whether to recover from connection outages or to consume the data in a different way. Rerunning a stream provides the same events with the same data.
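Because a rerun delivers the same events with the same data, a consumer resuming after an outage can skip events it has already applied. This sketch assumes that a `(timestamp, tick)` pair identifies an event uniquely within a stream, which is not stated here; `skip_already_seen` is a hypothetical helper, and in practice the `seen` set would be persisted alongside your local copy.

```python
from typing import Callable, Hashable, Iterable, Iterator, Set, TypeVar

E = TypeVar("E")


def skip_already_seen(events: Iterable[E], key: Callable[[E], Hashable],
                      seen: Set[Hashable]) -> Iterator[E]:
    """Yield only events whose key was not seen before, recording each one."""
    for event in events:
        k = key(event)  # assumption: (timestamp, tick) is unique per event
        if k in seen:
            continue  # a rerun redelivered an event already applied
        seen.add(k)
        yield event
```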

The only constraint is retention: events are retired from the feed once their time limit elapses, so you can re-consume only events from within the 48-hour retention limit.
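Before rerunning a stream after a long outage, you could check whether the events you still need fall inside the 48-hour retention window. This is an approximate sketch: `can_replay_from` is a hypothetical helper that measures the window from the last event you consumed to the current time.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

RETENTION = timedelta(hours=48)  # retention limit of Aria's Data Feed


def can_replay_from(last_consumed: datetime,
                    now: Optional[datetime] = None) -> bool:
    """Return True if events just after `last_consumed` should still be retained."""
    if now is None:
        now = datetime.now(timezone.utc)
    # Strict comparison: treat events exactly at the limit as already retired.
    return now - last_consumed < RETENTION
```

If it returns `False`, some events you have not yet consumed may already have been retired from the feed.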